Medical Diagnosis: Combine medical images (X-rays, MRIs), patient records, and lab reports to improve diagnostic accuracy and recommend personalized treatments.

Telemedicine: Integrate video consultations, text-based medical histories, and audio recordings to enhance remote patient assessments and care.

Entertainment and Media

Content Recommendation: Use data from user interactions (clicks, likes), textual reviews, and media content (audio, video) to provide personalized recommendations on streaming platforms.

Automatic Content Creation: Generate multimedia content, such as video summaries with audio narration and textual captions, by combining text, audio, and video inputs.

Retail and E-Commerce

Virtual Try-On: Combine visual data (images of clothing and users) with text descriptions and user preferences to provide virtual try-on experiences.

Customer Support: Integrate text-based chat logs, audio conversations, and visual data (screenshots, product images) to improve the efficiency and effectiveness of customer support.

Autonomous Vehicles

Perception Systems: Fuse data from cameras, LIDAR, RADAR, and GPS to create a comprehensive understanding of the vehicle’s environment and enhance decision-making for navigation and safety.
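As a rough illustration of what fusing sensor data can mean at the simplest level, the sketch below combines distance estimates for one object using inverse-variance weighting, so that less noisy sensors count for more. The `RangeEstimate` type, the sensor readings, and the variance values are hypothetical and chosen only for illustration. Production perception stacks typically use far richer methods, such as Kalman filters or learned fusion networks.

```python
from dataclasses import dataclass


@dataclass
class RangeEstimate:
    sensor: str        # sensor name, for readability only
    distance_m: float  # estimated distance to the object, in metres
    variance: float    # assumed noise variance of the estimate, in m^2


def fuse_estimates(estimates):
    """Inverse-variance weighted mean of independent estimates.

    Returns (fused_distance, fused_variance).
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance for e in estimates]
    total = sum(weights)
    fused = sum(w * e.distance_m for w, e in zip(weights, estimates)) / total
    return fused, 1.0 / total


# Hypothetical readings for the same obstacle (values are illustrative).
readings = [
    RangeEstimate("camera", 25.0, 4.0),
    RangeEstimate("lidar", 24.0, 0.25),
    RangeEstimate("radar", 24.5, 1.0),
]
fused_distance, fused_variance = fuse_estimates(readings)
print(f"fused distance: {fused_distance:.2f} m, variance: {fused_variance:.3f}")
```

The fused variance comes out smaller than that of any single sensor, which is the basic argument for combining modalities, provided their errors are roughly independent.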

Driver Monitoring: Combine visual data (driver’s facial expressions, body posture) and audio data (driver’s voice) to monitor driver attention and detect signs of fatigue or distraction.

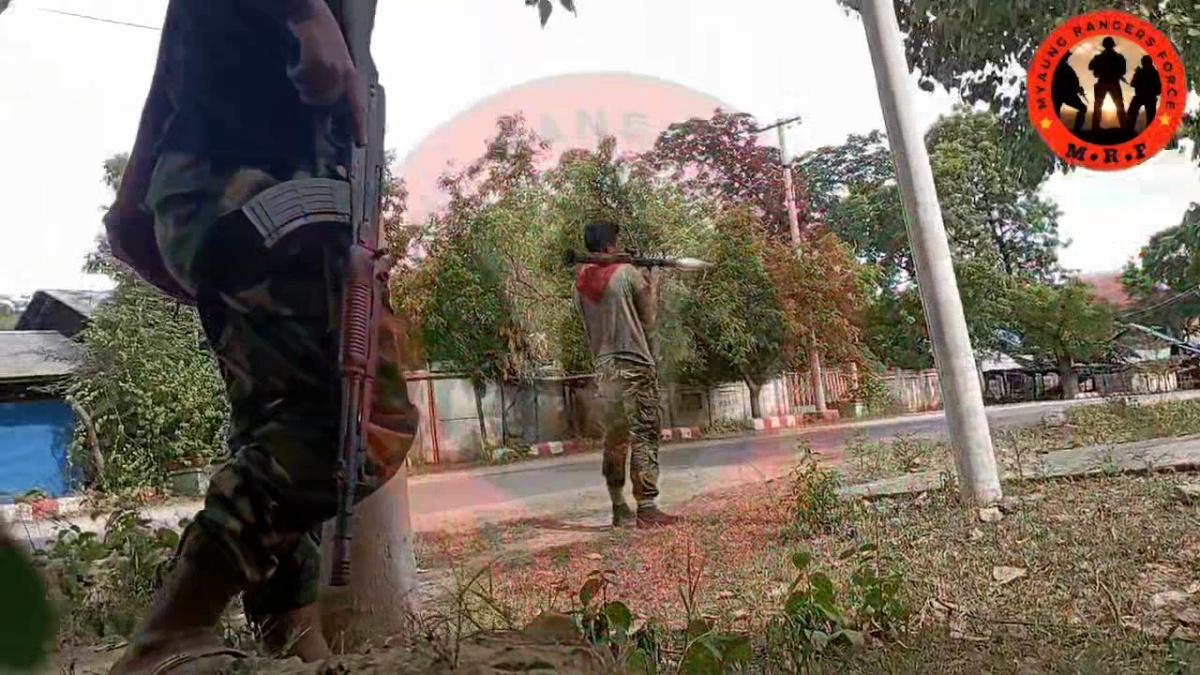

Security and Surveillance

Threat Detection: Integrate video footage, audio feeds, and textual data (e.g., social media posts) to detect and analyze potential security threats in real time.

Access Control: Use multimodal biometric systems that combine facial recognition, voice recognition, and fingerprint scanning for secure and reliable access control.
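One common way to combine several biometric matchers is score-level fusion: each matcher produces a similarity score, and a weighted average is compared against a threshold. The sketch below assumes scores already normalized to the range 0 to 1. The function name, weights, and threshold are hypothetical placeholders rather than values from any real system.

```python
def fuse_biometric_scores(scores, weights, threshold=0.7):
    """Weighted-average score fusion.

    scores and weights are dicts keyed by modality name.
    Returns (fused_score, access_granted).
    """
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same modalities")
    total_weight = sum(weights.values())
    fused = sum(scores[m] * weights[m] for m in scores) / total_weight
    return fused, fused >= threshold


# Illustrative match scores from three hypothetical matchers.
scores = {"face": 0.9, "voice": 0.6, "fingerprint": 0.8}
weights = {"face": 0.4, "voice": 0.2, "fingerprint": 0.4}

fused_score, access_granted = fuse_biometric_scores(scores, weights)
print(f"fused score: {fused_score:.2f}, access granted: {access_granted}")
```

In this sketch a weak voice match is offset by strong face and fingerprint matches. A stricter policy could additionally require every individual score to clear its own minimum.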

Education and E-Learning

Interactive Learning: Combine textual content, instructional videos, and audio explanations to create engaging and comprehensive learning experiences.

Performance Assessment: Analyze student interactions, including written assignments, spoken presentations, and video recordings of practical tasks, to provide holistic performance assessments.

Technical Considerations

Data Synchronization: Ensure that data from different modalities is properly synchronized, especially in time-sensitive applications like video analysis and autonomous driving.
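A minimal sketch of one synchronization strategy is shown below: each video frame is paired with the nearest audio sample by timestamp, and the pairing is rejected if the gap exceeds a tolerance. The timestamps (in milliseconds), the tolerance, and the function name `align_nearest` are assumptions made for illustration. Real pipelines may also need to handle clock drift and resampling.

```python
from bisect import bisect_left


def align_nearest(reference_ts, other_ts, tolerance):
    """For each reference timestamp, return the index of the nearest
    timestamp in other_ts (which must be sorted), or None when the
    nearest one is further away than the tolerance."""
    matches = []
    for t in reference_ts:
        i = bisect_left(other_ts, t)
        # Only the neighbours on either side of the insertion point can be nearest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other_ts)]
        if not candidates:
            matches.append(None)
            continue
        best = min(candidates, key=lambda j: abs(other_ts[j] - t))
        matches.append(best if abs(other_ts[best] - t) <= tolerance else None)
    return matches


# Hypothetical timestamps in milliseconds.
video_ts = [0, 40, 80, 120]
audio_ts = [0, 30, 90, 300]

matches = align_nearest(video_ts, audio_ts, tolerance=20)
print(matches)
```

Here the last video frame has no audio sample within 20 ms, so it is left unmatched instead of being paired with stale data.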

Model Complexity: Design architectures that balance model complexity and computational efficiency, as multimodal systems can be resource-intensive.

Interpretability: Develop methods to interpret and explain the decisions made by multimodal AI systems, which is crucial for applications in healthcare and security.
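One simple, model-agnostic way to probe a multimodal decision is modality ablation: remove one modality at a time and observe how the output changes. The sketch below applies this to a toy late-fusion model that averages per-modality scores. The model, scores, and weights are hypothetical stand-ins, and ablation is only one of several possible attribution approaches.

```python
def late_fusion_score(modality_scores, weights):
    """Weighted average over the modalities that are present (not None)."""
    present = [m for m, s in modality_scores.items() if s is not None]
    if not present:
        raise ValueError("at least one modality must be present")
    total = sum(weights[m] for m in present)
    return sum(weights[m] * modality_scores[m] for m in present) / total


def ablation_attribution(modality_scores, weights):
    """Score drop caused by removing each modality in turn.

    A positive value means the modality pushed the score up.
    Requires at least two modalities.
    """
    baseline = late_fusion_score(modality_scores, weights)
    attributions = {}
    for m in modality_scores:
        ablated = dict(modality_scores)
        ablated[m] = None  # simulate the modality being unavailable
        attributions[m] = baseline - late_fusion_score(ablated, weights)
    return attributions


# Illustrative per-modality confidence scores for one prediction.
modality_scores = {"image": 0.9, "text": 0.5, "audio": 0.7}
weights = {"image": 2.0, "text": 1.0, "audio": 1.0}

attributions = ablation_attribution(modality_scores, weights)
for name, value in attributions.items():
    print(f"{name}: {value:+.3f}")
```

In this toy case the image modality has the largest positive attribution, which suggests that it contributed most to the final score.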

Conclusion

Multimodal AI systems draw on the complementary strengths of different data types to build more robust and capable applications. By integrating information from text, images, audio, and video, these systems can often handle complex tasks with greater accuracy and contextual understanding than systems limited to a single modality, opening new possibilities across the domains described above.
